Raspberry Pi 5 handles image processing very differently from its predecessors. Our new computer introduces our hardware ISP (image signal processor), developed here at Raspberry Pi and built partly into our chip RP1, but mostly into Raspberry Pi 5’s application processor, Broadcom BCM2712.

In the video below, Eben talks with Senior Principal Software Engineers David Plowman, Naush Patuck, and Nick Hollinghurst about the new Raspberry Pi ISP, as well as discussing image processing on previous platforms and what we do to raw camera data in order to turn it into an image fit for humans to look at.

We’re really excited about the opportunities that the ISP opens up. David puts it perfectly:

… better this, better that, more output formats, you know, just more of everything, that’s better, on everything. This is all great. But actually, the most exciting thing is that it’s our platform, and we get to develop it going forwards.

What follows is a transcript of David, Naush, Nick, and Eben’s video about image processing.

As usual, this is a machine transcription with editing by a human, and if you notice anything we’ve got wrong, you can let us know in the comments below if you like.

Eben 0:08: I guess one of the biggest differences, one of the biggest changes, between Raspberry Pi 4 and its predecessors and Raspberry Pi 5 is imaging, is how we deal with data that comes from the camera. Now we’ve been doing cameras at Raspberry Pi since about 2013, I think we had a five-megapixel OmniVision product, which was actually the very first official accessory for the Raspberry Pi. Back in those days, how did we deal with data that came in from a camera?

David 0:36: Well back then — It’s important to understand that these are what’s called raw sensors. So you get sort of a very basic form of pixel data from it; it’s not sort of beautiful, nice images that you can just sort of throw straight on the screen. So they need a lot of processing to make them look nice. So we get these very raw numbers, they come off the image sensor —

Eben 0:59: And these are red, green, and blue values, for every pixel?

David 1:01: Not for every pixel, no, no; in fact, every pixel actually only has a single colour, in fact, and then part of the processing that we have to do is to, we would say, interpolate the missing colours, you could say invent, perhaps, or make up, the missing colours. So that’s one of the things that happens. But anyway — so you get these pixels coming off the sensor, they come down on the Raspberry Pi, people will know the flat ribbon cables that we have. So they’ll come down the ribbon cable.

And there’s a hardware block on the Pi that receives them — that’s called the receiver — and which just dumps them into memory, is all that happens. They just get dumped straight into memory. And then everything else happens, actually, on the main chip itself. And there’s this piece of hardware, which is called an image signal processor, often abbreviated as an ISP, so nothing to do with internet service providers —

Eben 1:55: I’m super excited to learn it isn’t image sensor pipeline, which is what I was kind of —

David 1:59: Well, it used to be. It’s changed names. I think most people — Yeah, it’s had various versions of the abbreviation but I think most people now call it an image signal processor, I would say. So there’s one of those actually on the Raspberry Pi chip.

Eben 2:13: And this reads these “bad” images. So these cameras: they’re quite cost-engineered, aren’t they? So both the optics and the sensor itself?

David 2:26: Yes, that’s right. Yeah. So the, as we’ve said, the pixels that we have in memory, at this point, are quite ugly things. I mean, they’re dark, they’re gloomy, they’re all mostly green, it’s just a bit of a mess, really. So you can’t use those for anything, really. And then there’s this thing called the image signal processor, or the ISP, that reads everything back from memory, where it’s been stored, when it arrived from the sensor; it processes them; it does lots of stuff to them. We can talk more about that in a minute, I guess. Does lots of stuff to them, and makes a nice picture. And then writes that back out to memory; that’s basically what happens. Yeah, so it’s just dealing with processing the pixels into a nice output image. There’s no image encoding, so it’s got nothing to do with image encoders or JPEGs or anything like that; that’s all a later stage.

Eben 3:14: So this is RGB data coming in from the camera.

Naush 3:20: It’s called Bayer data.

Eben 3:21: Bayer data: what’s Bayer data?

Naush 3:23: It’s one red, one blue, and two green pixels —

Eben 3:28: For each little two-by-two. So when you say each pixel only has a single colour, that’s what you mean. It’s either a red, a green, or a blue. And there are twice as many greens as there are the other colours. So this data’s coming in as Bayer data, which is this kind of sparse RGB data. It’s going through the pipeline; it comes out as RGB data, or does it come out as something else?

Naush 3:57: RGB or YUV.
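The two-by-two Bayer pattern described above can be sketched in a few lines. This is an illustration only, not code from the Raspberry Pi camera stack; the RGGB ordering and the function names are assumptions, and real sensors may start the tile on a different colour.

```python
# Minimal Bayer-mosaic sketch. The RGGB ordering is an assumption made for
# illustration; real sensors may start the 2x2 tile on a different colour.

BAYER_TILE = (("R", "G"),
              ("G", "B"))


def bayer_colour(row, col):
    """Return which single colour the sensor pixel at (row, col) measures."""
    return BAYER_TILE[row % 2][col % 2]


def mosaic(rgb_image):
    """Keep only the one colour sample per pixel that a Bayer sensor would see.

    rgb_image is a list of rows, each row a list of (r, g, b) tuples.
    """
    channel = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[channel[bayer_colour(y, x)]] for x, pixel in enumerate(row)]
        for y, row in enumerate(rgb_image)
    ]


def colour_counts(height, width):
    """Count how many pixels of each colour a height x width sensor has."""
    counts = {"R": 0, "G": 0, "B": 0}
    for y in range(height):
        for x in range(width):
            counts[bayer_colour(y, x)] += 1
    return counts
```

For a 4x4 patch, `colour_counts(4, 4)` gives 4 red, 8 green, and 4 blue pixels, which is the "twice as many greens" Eben mentions. Everything `mosaic` throws away is what the ISP later has to interpolate.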

Eben 3:58: Okay. And so YUV is another colour space, it’s this intensity: rather than just saying “this much red, this much green, this much blue”, it’s kind of “this much brightness, and this much colour”.

Naush 4:08: Colour difference.

Eben 4:09: Colour difference, yeah.

David 4:10: It’s a different colour encoding, as people would say.

Nick 4:13: It’s optimised for video encoding.
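As a rough sketch of the "brightness plus colour difference" idea, the conversion below uses the BT.601 luma weights. That choice is an assumption for illustration (other standards, such as BT.709, use different weights), and nothing here is taken from the ISP itself.

```python
# RGB -> YUV sketch using the BT.601 luma weights (an assumption; other
# standards use different weights).

def rgb_to_yuv(r, g, b):
    """Convert RGB values in the range 0..1 to (Y, U, V)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b   # brightness (luma)
    u = 0.492 * (b - y)                     # blue colour difference
    v = 0.877 * (r - y)                     # red colour difference
    return y, u, v
```

A neutral grey or white pixel comes out with U and V very close to zero, so all of its information sits in Y. A saturated red pixel has a positive V and a negative U.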

Eben 4:16: Right. And that’s then the encoding that something like a video codec or JPEG uses… okay. So if you’re gonna take your data and store it, encode it and store it, it’s more convenient to have it as YUV than RGB.

Naush 4:30: And YUV allows you to compress the — well not compress, but remove some of the colour samples. Because —

Eben 4:39: And why is it safe to do that?

Naush 4:40: Because your eyes are more sensitive to luminance changes than colour changes.

Eben 4:44: This is rods and cones density.

David 4:47: I mean, you could argue it’s not terribly safe to do it, but we do it anyway. I mean, it does create problems. I mean, that’s one of the reasons why, you know, when you see people with highly stripy shirts on TV, sometimes you get some funny colours appearing, and that’s because they actually throw away the colour data, right? That’s what happens.
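The colour-sample removal discussed above can be sketched as averaging each two-by-two block of a colour-difference plane. The 4:2:0-style layout, the averaging, and the function names are assumptions for illustration; the conversation does not say which subsampling scheme is used.

```python
def subsample_420(plane):
    """Average each 2x2 block of a chroma plane (even height and width assumed)."""
    return [
        [
            (plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
            for x in range(0, len(plane[0]), 2)
        ]
        for y in range(0, len(plane), 2)
    ]


def sample_count(width, height, subsampled):
    """Total stored samples for one frame: the Y plane plus two chroma planes."""
    luma = width * height
    chroma = (width // 2) * (height // 2) if subsampled else width * height
    return luma + 2 * chroma
```

With these assumptions a 1920x1080 frame drops from 6,220,800 samples to 3,110,400, exactly half. The cost shows up on fine colour stripes: `subsample_420([[0, 1, 0, 1], [0, 1, 0, 1]])` returns `[[0.5, 0.5]]`, so the stripe pattern is gone, which is the stripy-shirt problem David describes.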

Eben 5:03: So that’s the classic world where you have [BCM]2835 on a Raspberry Pi 1, [BCM]2711 on a Raspberry Pi 4, and all of that’s integrated into one chip. So you have the receiver, you have the block that writes data from the receiver to memory — that’s called Unicam in the old world, right? And then you have the ISP, the image signal processor, which is a memory-to-memory bus master, which is pulling those Bayer images in, doing stuff, and then writing either non-sparse RGB or YUV, or non-sparse YUV or sub-sampled YUV, back to memory. So we should probably talk a little bit about the stuff. What did stuff consist of classically? Obviously, one of them would be Debayer, right?

Naush 5:52: So yeah, statistics, black level correction, Debayer as you said…

Nick 6:00: We generally start off trying to fix defects in the image by trying to reduce the noise, and to spot defective pixels. Although actually sensors usually do quite a good job of hiding defective pixels themselves, because the sensor manufacturer knows what kind of defects are likely to be present.

Eben 6:17: Right. And so you may just, in a 12-megapixel sensor, you may just have some pixels which are dead.

Nick 6:24: Yes. And you just replace those with a neighbouring signal. So really, the first thing we try to do is get rid of noise, by smoothing where the image looks like it ought to be smooth.

Eben 6:38: Right. Okay. And what sort of noise is this? This is thermal noise, or…?

Nick 6:44: There’s thermal noise and quantisation noise in the electronics. There’s also shot noise due to the fact that the photons arrive at random times.

Eben 6:56: So you’re trying to build some model of what you think the signal should look like, and then kind of push the signal towards what it should be like.

Nick 7:08: Yes. So there are different ways of doing it, but the general approach is, where the signal looks like it’s smooth up to a certain degree of noise, you make it more smooth. And where it looks like there are sharp edges, you kind of preserve it.
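One simple way to picture "smooth where it looks smooth, preserve the edges" is a filter that only averages neighbours whose values are close to the centre pixel. The sketch below works on a single row of values; the threshold, the radius, and the function name are hypothetical, and the real ISP filters are certainly more sophisticated than this.

```python
def denoise_row(samples, noise_threshold, radius=2):
    """Smooth a row of pixel values while leaving sharp edges alone.

    Each output value is the mean of those neighbours (within `radius`) whose
    value differs from the centre pixel by no more than `noise_threshold`.
    The centre pixel always qualifies, so the mean is always defined.
    """
    out = []
    for i, centre in enumerate(samples):
        lo = max(0, i - radius)
        hi = min(len(samples), i + radius + 1)
        similar = [v for v in samples[lo:hi] if abs(v - centre) <= noise_threshold]
        out.append(sum(similar) / len(similar))
    return out
```

Given `[10, 12, 9, 11, 100, 102, 99, 101]` with a threshold of 5, the wobble within each flat region shrinks, while the jump from about 10 to about 100 stays a sharp step because values on the far side of the edge are excluded from each average.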

Eben 7:20: Right. And that’s spatial. So that’s spatial denoise. Okay. So you’ve done some spatial denoise, then what? You’ve got distortion correction in there somewhere?

David 7:31: Well, yeah, I mean, I was gonna take a step back, actually, and just — they’re kind of — to my mind, there are two kinds of things that we tend to do. There’s the sort of large-scale processing that we do to images: so this is about getting the colours right, getting the gamma curves right, so the thing basically looks like a nice image. And you can kind of think about a lot of that without worrying about the actual pixel-level detail, and all that kind of stuff. But then that’s the other thing you have to do, obviously, you have to get the pixel-level stuff sorted out as well. So this is always very sort of fine-grained, it’s to do with the denoising that we’ve talked about, it’s to do with the sharpening, it’s to do with Debayering as well, all those kinds of things. So there’s very much these two things going on.

Eben 8:09: And you have a pipeline of these stages, and you’re passing… you’re passing pixels down this, and it gradually becomes — you start off with this Bayer, you know, extremely… incorrect… data. And at the end of it, you hope to have something which, I guess, is true, for some value of true; is the closest you can get to the ground truth, the actual state of the world that generated the…

David 8:43: Yes. I mean, I’ll go on to say that a lot of the sort of corrections that we do — so Nick talked about the defective pixel correction, the denoising, all that kind of stuff — you try and do it early, because when the pixel numbers have come off the sensor, you have some expectation as to what statistical distributions they follow and this kind of stuff, you probably calibrate and measure them. So you kind of do stuff with them. As soon as you’ve started touching the pixels and doing stuff with them, all that starts to go away, so you kind of can’t do that anymore. You can’t denoise as effectively later in the pipeline, because you’ve done all kinds of stuff to the numbers, so you’ve kind of got no idea really what you’re looking at any more. So a lot of that sort of stuff happens early.

Eben 9:18: So there’s a natural order.

David 9:19: Yeah, there is a fairly natural order for a lot of it, that you try and do a lot of that sort of stuff early, you fix up the problems. And then you’ve got to sort out the colours, you know, the transfer function, sharpen it up a bit, all that kind of stuff.

Nick 9:30: So the big sort of milestone in the pipeline is demosaic, where you’re changing — before then you have the Bayer order data and after then you have RGB.

Naush 9:38: And after that, you also have gamma, which then removes more linearity from the pixels.
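To illustrate why gamma removes linearity, here is a sketch using a plain power-law curve. The exponent of 2.2 is an assumption chosen for illustration; a real camera tuning uses its own measured curve.

```python
def apply_gamma(linear, gamma=2.2):
    """Map a linear value in 0..1 to a gamma-encoded value in 0..1."""
    return linear ** (1.0 / gamma)
```

After this step, doubling the light no longer doubles the pixel value: `apply_gamma(0.4)` is noticeably less than twice `apply_gamma(0.2)`. That is one reason the statistical corrections discussed earlier are done before this point in the pipeline.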

Eben 9:47: So that’s your kind of classic ISP pipeline, as a memory-to-memory master. You then have algorithms that kind of run around the outside of this: there’s sort of… 3A stuff? Is that right? So these are your auto algorithms —

Naush 10:11: Auto white balance, auto exposure gain, and autofocus. But these days we do another one; we call it 4A, don’t we.

David 10:20: Yeah. So I mean, the way to think of it really is that every block in the hardware, we have an algorithm associated with it, pretty much — that controls it. Some algorithms control more than one block. So a typical one would be, we talked about the auto exposure and gain algorithm. So obviously that would control — well that controls the sensor, but it also controls the gain block in the ISP. And then other blocks, like there’s a block that applies colour gain: that again has an algorithm that controls it; happens to be called the auto white balance algorithm. So every one of these algorithms —

Eben 10:55: And your auto white balance algorithm is — so your automatic gain control, automatic exposure algorithm is trying to avoid the image being blown out. If you walk from inside to outside, something’s going to happen that causes this outer set of algorithms to say, aaaagh, too much light, dial down some gains. And if you walk back inside you go, well this image looks almost black, let’s dial the gains up a little bit.

Similarly, with the colour, I mean, I saw an amazing — the New York thing, I saw a really wonderful thing recently where somebody, you know, they had the orange sky or that dust that had come. And there was somebody illustrating that smartphone pictures of it weren’t really capturing how incredibly orange it was, and they took one of the colour charts outside and then calibrated the — because the auto white balance was trying to make the, it was saying, well this image is too orange, let’s make it less orange. And then when you take a colour chart out and say, “that square there is white”, you drive your auto white balance for that square, suddenly, you see how incredibly orange the place really was.

David 11:51: Yeah, I mean, that kind of thing is actually classic, because — auto white balance is kind of an impossible algorithm. Because when you’re looking at a white wall under a kind of yellowish light, you can’t tell it’s not a yellow wall under a white light; you have no, you know. So it’s a sort of — it has statistical properties, you have to look at the statistics and likelihoods of the things that you’re seeing and determine what you think is most plausible. And so the upshot of that is, of course, that white balance algorithms tend to be calibrated against typical scenes, so that you assume kind of normal-ish behaviour of things. And so when you get exceptional circumstances, they get caught out. So that’s exactly it. And the trick is to, you know, cover as many of the outlying circumstances as you can, without messing up the common ones as well.
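The orange-sky failure is easy to reproduce with the textbook "grey world" heuristic, which assumes the average colour of a scene ought to be neutral. This is a deliberately naive stand-in, not the white balance algorithm Raspberry Pi ships, and the function names are hypothetical.

```python
def grey_world_gains(pixels):
    """Return (red_gain, blue_gain) that make the average colour neutral.

    pixels is a non-empty list of (r, g, b) tuples whose red and blue means
    are non-zero. Green is left untouched and used as the reference.
    """
    n = len(pixels)
    mean_r = sum(p[0] for p in pixels) / n
    mean_g = sum(p[1] for p in pixels) / n
    mean_b = sum(p[2] for p in pixels) / n
    return mean_g / mean_r, mean_g / mean_b


def apply_gains(pixels, red_gain, blue_gain):
    """Scale the red and blue channels of every pixel by the given gains."""
    return [(r * red_gain, g, b * blue_gain) for r, g, b in pixels]
```

Feed it a frame that is uniformly orange, say `(200, 120, 40)` everywhere, and the computed gains are 0.6 for red and 3.0 for blue, turning every pixel into a neutral `(120, 120, 120)`. The heuristic cannot tell an orange sky from a grey scene under orange light, which is why a reference such as a colour chart helps.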

Eben 12:34: And then the other classic algorithm is autofocus, where you have one of several methods — I know with Camera Module 3, we’ve got several methods, including the phase-detect method for going, hang on, yeah, this image is — Yeah, this image is out of focus, drive the focus actuator backwards and forwards.

David 12:51: Yeah, so the Camera Module 3 uses phase-detect autofocus, I dunno if Nick can tell us about that, but it’s a particularly cool method, actually, of doing autofocus.

Nick 12:59: The nice thing about it is you can’t — as well as telling you whether it’s in focus or not, it tells you which direction you have to move the lens to get it in focus.

Eben 13:08: Because there are simpler contrast-detect autofocuses, aren’t there, which try to maximise — when it’s out of focus it’s blurry, so if you make the image have more high frequency, you —

Nick 13:17: You have to search for the lens position that makes it least blurry.

David 13:20: Yeah, and that’s very difficult because you have no, you have no definite knowledge that it’s now out of focus, there’s something wrong, all you can do in that kind of world is just, well, the image has vaguely changed a bit, maybe I need to do another autofocus kind of search, and you don’t know whether to go forwards or backwards. So you get this kind of hunting behaviour. And it’s very hard to calibrate it to hunt for focus only when it really needs to, rather — it is, basically it’s impossible, it’s —

Nick 13:46: — the phase-detectors.

David 13:48: But the phase-detector nails all that for you. It’s a — you know, that’s why it’s very common now, on all the cameras.
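The contrast-detect search described above can be sketched as a hill climb over lens positions. Everything here is hypothetical: the `capture` callback, the sharpness measure, and the toy lens model exist only to show why this approach has to try a direction and turn round, which is the information phase detection provides for free.

```python
def sharpness(row):
    """Contrast measure: sum of squared differences between neighbouring pixels."""
    return sum((b - a) ** 2 for a, b in zip(row, row[1:]))


def contrast_autofocus(capture, start, lowest, highest):
    """Hill-climb to the lens position whose image has the most contrast.

    capture(position) is a hypothetical callback returning a row of pixel
    values for that lens position. Contrast alone cannot say which way to
    move, so the search tries one direction and then the other.
    """
    position = start
    best = sharpness(capture(position))
    for step in (1, -1):
        while lowest <= position + step <= highest:
            candidate = sharpness(capture(position + step))
            if candidate <= best:
                break                      # getting blurrier: stop this direction
            position += step
            best = candidate
    return position


def simulated_capture(position, in_focus=7):
    """Toy lens model (hypothetical): an edge that loses contrast with defocus."""
    edge_height = 100.0 / (1 + abs(position - in_focus))
    return [0.0, 0.0, edge_height, edge_height]
```

With the toy model, `contrast_autofocus(simulated_capture, 2, 0, 10)` and `contrast_autofocus(simulated_capture, 10, 0, 10)` both settle on position 7, but only after capturing and measuring a frame at every step along the way.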

Eben 13:54: So you’ve got ISP in the middle, you’ve got a receiver, you’ve got a Unicam receiver, ISP, algorithms that are inside the ISP; we talk about tuning sometimes. What is tuning?

David 14:05: It’s all the characterisation of a particular camera, so it’s everything from the… you calibrate what kind of noise you get from the sensor, so that the hardware block can be told what kind of levels of noise it has to try and flatten; you calibrate the colour response of the camera, so that it knows how to turn the camera’s version of colours into proper true colours that you would recognise…

Nick 14:30: Lens shading?

Naush 14:31: Yes, shading correction, yeah —

David 14:31: Yeah, so vignetting and colour shading, very common across lenses, all that.

Naush 14:36: Gamma, how we shift the luminance in the image to make it look nicer.

Eben 14:42: So you end up — so the flow there is you get a sensor or some set of sensors, some representative sample of sensors from the manufacturer, and then you take pictures of some known stuff. And you derive from that a big table of numbers which constitutes the tune.

David 14:59: Yes.

Eben 15:00: And that’s changed a little bit, hasn’t it, over the course — between the old world, there’s not just an old hardware world and a new hardware world, there’s an old software world and a new software world, right. So the libcamera world tuning is a little bit different.

Naush 15:14: It’s a lot simpler, a lot less numbers to deal with.

Eben 15:17: So there’s a lot of configurability in the classic ISP, and you’ve found ways to really, kind of, trim — slim back down to just the meaningful configurations.

David 15:24: There’s various things we’ve done. I mean, in the old world, again, you know, we had lots of different customers, and they were — tended to be often quite big customers. And when they wanted a particular feature, you would give them the particular feature they wanted. But it sort of meant we had piles and piles of features for every customer we’d ever worked with, they wanted particular things. So this thing filled up with numbers like crazy, really — made it very hard to create — and this is one of the reasons why the, you know, the HQ Cam took so long to roll out because, you know, my goodness, someone had to make this tuning file up, and it was just a nightmare.

Naush 15:55: That was me!

David 15:56: That was you, that was Naush! So that was really hard. So we were very keen —

Eben 15:59: Muggins!

David 16:00: Yeah! So we were very keen to drop all that stuff that we thought just wasn’t really useful, generally speaking.

Naush 16:07: Most of those numbers you wouldn’t touch.

David 16:08: Yeah. And you know, to some extent in the new, open world, yeah, when people want these kinds of particular behaviours, they can put them in. The code’s there, they can do it. So we didn’t feel we had to put everything in off the bat. But then there was also a real wish to make the whole thing just a darn sight simpler, so that we could turn a new camera around in a, you know, a few days or something rather than like months every time.

Eben 16:32: So you had these big tuning campaigns for OV5647 which was the Camera Module 1, IMX219 for Camera Module 2, [IMX]477 for HQ Cam. And then the libcamera world appears, and subsequent cameras; so you then went back and retuned those, tuned those old cameras in the new world? Yeah. So you can still use the Camera Module 1 in the libcamera world.

Naush 16:58: That’s right.

Eben 16:59: But subsequent cameras are only tuned —

Naush 17:01: And we think it looks better.

David 17:01: It mostly looks better. And we have a much better handle on what’s actually going on inside in there.

Eben 17:07: I’ve seen some really nice Camera Module 1 pictures recently, that are materially better than I would historically have thought Camera Module 1 could do.

David 17:17: Yeah, I think we do do better even on the existing, the old platform, where actually the tunings that we have are better, but just in part because they’re less complicated, less of a nightmare to make. It means we can spend time just getting the basic things in order. And it’s a much better place to be.

Eben 17:33: So that’s the classic world. That’s the classic hardware world. What’s different in Raspberry Pi 5?

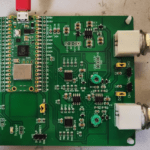

Nick 17:40: I guess the main difference is it’s been split between two chips. So the CSI receiver and part of the ISP are now in the RP1 southbridge. And then most of the ISP is in the main processor, 2712.

Eben 17:55: So you have MIPI — and we have two four-lane — so we’ve grown some —

Nick 17:59: Yes, we have two four-lane MIPI ports that can be either CSI-2 or DSI, or one of each.

Eben 18:06: And we have a little bit of a challenge finding four-lane, there aren’t a huge number of four-lane sensors, obviously, but…

Nick 18:10: All our standard cameras are still two-lane, but…

David 18:13: But the door is open to start doing four-lane ones —

Eben 18:15: And there are some super high-resolution four-lanes —

Naush 18:17: And we’d love to do it.

Nick 18:18: That would give us much higher resolution and higher frame rates.

David 18:22: It’d be great to do, I mean, the HQ Cam, in theory, will do four lane…

Naush 18:25: HQ Camera can do four lanes, yeah.

David 18:26: I mean, there’s a lot of slog, we’d need a board that has four lanes, we’d need a driver that supports four — all these things that we don’t have now. But the door is open to doing these things, and then we could just drive one of these things to 12 megapixels —

Eben 18:36: Or two. Or two of these —

David 18:37: 12 megapixels, 30 frames a second, and there you are.

Eben 18:40: So you’ve got, so you have two camera connectors; each of them is twice as wide as before. As you say, these are not connected to the main chip.

Nick 18:51: They’re on RP1, yes. And then co-located on RP1 with each of these MIPI interfaces is what we call the ISP front end. Which doesn’t do a great deal; one of the significant things it does is get statistics early on the raw image coming in. So we don’t have to rely on feedback from the previous image. We’ve got more data up front.

Eben 19:12: Does this make some of these 3A, 4A algorithms easier? Is that what this is?

Nick 19:19: Yes, I mean, we can converge a bit faster.

Naush 19:21: It removes a bit of the latency.

Eben 19:23: So you’re doing some early processing, a little bit of defective pixel work…?

Nick 19:28: There’s defective pixel correction. I don’t think we’re using it at the moment; as I say, the sensor manufacturers tend to do a good job, but it’s there if we need it. That’s really there to protect the statistics. And there’s also optional downsampling in the front end to reduce the memory bandwidth.

David 19:43: It’s probably more to protect the downsampling actually, isn’t it, I mean…

Nick 19:48: Once you start messing with the image, then the pixels become correlated and it’s harder to do defective pixel correction.

Naush 19:53: One of the things we do in the front end is we also compress the Bayer data.

Nick 19:56: And we have the compression scheme.

Eben 19:58: And that’s a lossy, slightly lossy —

Nick 20:01: It is slightly lossy, it’s ten or twelve bits down to eight bits.

Eben 20:04: Right. So it’s only a little bit of loss.

Naush 20:06: You couldn’t even notice it.

Nick 20:06: It’s been carefully designed for our use case to, to try and make it so you can’t see the loss.
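The conversation does not describe how the RP1 compression scheme works, so the sketch below is not that scheme. It is a generic square-root companding curve, swapped in purely to show how ten bits can be squeezed into eight with little visible loss: photon shot noise grows with the square root of the signal, so coarser steps in bright pixels tend to hide beneath the noise. All names and constants are assumptions.

```python
import math

MAX_10BIT = 1023
MAX_8BIT = 255


def compress(value10):
    """Map a 10-bit value to 8 bits with a square-root curve (illustrative)."""
    return round(math.sqrt(value10 / MAX_10BIT) * MAX_8BIT)


def decompress(code8):
    """Approximate inverse of compress()."""
    return round((code8 / MAX_8BIT) ** 2 * MAX_10BIT)
```

Round-tripping every 10-bit value through this curve gives an error of at most a few counts at the bright end and essentially none in the darkest values, where small differences matter most.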

Eben 20:13: Because we have a relatively — well, we have probably what, in my mind, is a lot of bandwidth over PCI Express; we have two gigabytes a second of bandwidth over PCI Express. But this is in a platform that has 16 or 17 gigabytes a second.

Naush 20:24: But it’s also on the other end. So when you’re reading in from the back end ISP, you’re reading in less…

David 20:34: Yeah, I mean, for everyone listening, it’s worth putting out there: it’s optional, the compression, so you can turn it off.

Eben 20:39: Is it turned on? By default?

David 20:39: We do mostly turn it on. But it’s optional: you can turn it off and not have it.

Eben 20:45: But if you want to be a — so, in the future, where we potentially do have a pair of four-lane high-resolution cameras connected, of course, yeah —

Nick 20:52: Yes, then we’d appreciate having compression.

Eben 20:54: That’ll be very helpful, right. So that stuff is in RP1. So you’ve then got…

Nick 20:58: Statistics, compression, and a bit of downscaling and defective pixel correction. And then 2712, we’ve got most of the ISP.

Eben 21:10: Which, again, is a memory-to-memory master. So it’s now reading the potentially compressed, somewhat touched-up data that’s come in over PCI Express from RP1, doing stuff to it, and getting nice pictures out the back. What stuff? How’s the stuff different? So a lot of the stuff is — the overall shape of the stuff is quite similar, right?

David 21:33: A lot of it is very similar. I mean, one big difference to start with, it’s got much higher throughput. And that’s a huge change. I mean, it’s clocked higher and it runs at two pixels per clock. So, I mean, this —

Eben 21:46: I think it’s clocked at something like 800 megahertz. So you can get 1.6 billion pixels a second.

David 21:50: Yeah. I mean, this thing will do dual 4Kp60 in its sleep virtually. It really is very capable.

Eben 21:56: We’ve gone from, sort of, single pixel per clock, 500 megahertz, to two pixel per clock, 800 megahertz. So it’s over a 3x uplift.

David 22:06: Yes, that’s right. Yeah. So in reality, the old ISP, you — Well, you’ve got two or three hundred megapixels a second, three with a bit of luck, would you say?

Naush 22:15: Yeah, you take — you remove about, you have an overhead of about 30, 40 per cent. Because of the overlaps on the tiles. And similar here as well, but not as much overlap, actually, in the new world. But yeah, so you’d get about 200, 250 megahertz —

David 22:34: But, as I say, now, it’s vastly greater. So throughput is a really big difference.
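The throughput figures quoted in this exchange can be checked with a little arithmetic. The clock rates are the approximate ones Eben gives; taking "4K" to mean 3840x2160 is an assumption. These are peak numbers that ignore the tile-overlap overhead Naush mentions.

```python
def pixels_per_second(pixels_per_clock, clock_hz):
    """Peak pixel throughput of an ISP, ignoring any tiling overhead."""
    return pixels_per_clock * clock_hz


OLD_ISP = pixels_per_second(1, 500_000_000)   # one pixel per clock at ~500 MHz
NEW_ISP = pixels_per_second(2, 800_000_000)   # two pixels per clock at ~800 MHz
UPLIFT = NEW_ISP / OLD_ISP

# Two simultaneous 4Kp60 streams, assuming "4K" means 3840x2160.
DUAL_4KP60 = 2 * 3840 * 2160 * 60
```

That gives 1.6 billion pixels a second for the new ISP, a 3.2x peak uplift, and 995,328,000 pixels a second for dual 4Kp60, which fits within the peak figure.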

Eben 22:41: In terms of algorithms, some of it’s better versions of the same thing, right? Better Debayering, better spatial noise…

David 22:49: Yeah, I would say the spatial denoise is certainly better, it’s got a wider, the filters have wider support, so you get better performance that way.

Eben 22:58: What’s actually — ?

Nick 22:59: Temporal denoise?

Eben 22:59: Ah, temporal denoise. So that sounds like the big — So when we talked about denoise, we talked about spatial denoise, about looking at a pixel and looking at its neighbours and going yeah, that doesn’t look right, that’s a smooth — there’s some smooth change that I can see across my filter support, I can see some smooth change. And this pixel is sticking out above that smooth change, it’s probably not a real edge, it’s probably shot noise or thermal noise. So that’s spatial denoise. But now we have temporal denoise, right?

David 23:27: We do. So there’s fully hardware temporal denoise. So what this does is it compares every frame that arrives with kind of the previous frame, effectively. And if there — where they look like they’re the same, it averages them together in some way. But this is a long-term process, if you like, so all the while it’s averaging frames to create what we call the long-term average frame. So this is kind of like the denoised, the temporally denoised version of the frame. And then every time a new one comes in, we compare it with that. And we kind of merge them together. And that’s the new long-term average frame. And that’s what then goes forward down the rest of the pipeline.

Eben 24:01: So, looking behind me, at this blank space behind me, if, in a video, there’s variation from frame — significant variation from frame to frame in that colour, it’s obviously wrong, right, because it’s a static thing, it’s not changing. And that’s what temporal denoise tries to work out.

David 24:18: Yes. It will really clean that sort of stuff up. So you get much lower noise videos, you get to spend much more of your — your bit rate for your encoders, you actually spend encoding the detail and not the noise. So you get much better video quality as a result. So temporal denoise is a really good feature, actually, to have. Things get much cleaner. And so that’s nice.

Naush 24:41: It makes a tremendous difference.
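The long-term average frame David describes can be sketched as a per-pixel running blend. The hard threshold, the blend weight, and the function name are assumptions made for illustration; the hardware's actual comparison and merging rules are not spelled out in the conversation.

```python
def temporal_denoise(average, new_frame, threshold, weight=0.25):
    """Merge a new frame into the long-term average frame.

    Frames are flat lists of pixel values. Where the new pixel is within
    `threshold` of the running average, the scene is treated as static and
    the two are blended. Elsewhere something has changed, so the new pixel
    is used as it is.
    """
    merged = []
    for avg, new in zip(average, new_frame):
        if abs(new - avg) <= threshold:
            merged.append(avg + weight * (new - avg))
        else:
            merged.append(new)
    return merged
```

For example, `temporal_denoise([100, 100], [104, 200], threshold=10)` returns `[101.0, 200]`: the first pixel is treated as noise on a static background and pulled only a quarter of the way towards the new value, while the second is treated as real change and replaced. The result becomes the long-term average for the next frame.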

Eben 24:42: So that’s — is that the big ticket in terms of things which are qualitatively different about this ISP?

David 24:52: Yeah; well, yeah —

Naush 24:53: That’s one of the big things, yeah, definitely, one of them.

David 24:54: One of the things.

Nick 24:55: HDR is another one.

Naush 24:57: HDR’s another one, yeah.

David 24:58: HDR’s a thing we’ve been looking at, yeah. So, again, there’s various ways you can drive that. You can —

Eben 25:03: Sorry, what’s HDR?

David 25:04: So, High Dynamic Range imaging. So, normally what this means is you have to have several images, and you have to combine them together in some way. So —

Nick 25:13: Exposure bracketing.

David 25:15: Yeah, it’s a bit like exposure bracketing. So you get both the dark areas, you can bring them up, and also the highlights, you can avoid them blowing out and try to bring them down.

Eben 25:22: So it’s the classic room with a window. Badly lit room with a window. You want to somehow have, simultaneously have not much gain so you can see the tree out of the window and the window doesn’t just blow out to white, but also quite a lot of gain inside the room, so you can actually see things in the room…

Naush 25:40: And our Camera Module 3 does this on-chip. But now we’re going to — we are going to try and get the ISP to do it for the other sensors as well. Or any sensor.

David 25:49: Yeah, so that is quite fun as well. And there’s various different ways we can drive the HDR processing — it —

Eben 25:57: So is this a commitment to bring HDR to HQ Cam then?

David 26:00: Well, I mean — well —

Eben 26:02: At least for static, at least for relatively static scenes.

David 26:04: Well, yeah. So — okay, it’s all complicated. There are two kinds of HDR that we’re doing at the moment. One of which is where we basically run the camera underexposed, and combine all the frames that come in. We basically add them up and average them so it’s like we had both short exposures and long exposures. And we kind of munge all those together. And then that goes forward and goes through the tone-mapping process. So actually that’s quite a nice form of HDR; it’s very good for video, actually —

Naush 26:33: Yeah, because you don’t suffer motion artefacts.

David 26:35: We don’t suffer motion artefacts, because basically it relies on temporal denoise to basically add things up, is kind of what it does.

Eben 26:43: So how does it get rid of motion, then, if…? Global motion or local motion —

David 26:47: Yeah, so what happens is that temporal denoise averages where things are the same, and where things aren’t the same, it just takes the most recent frame —

Eben 26:55: Oh!

David 26:55: — it’s just the right — so, kind of — things are moving —

Eben 26:57: So you kind of lose your HDR for moving objects.

David 26:59: Well, no, so you have your HDR, but what happens is you get slight noise halos, if things are moving. So you still get a perfectly reasonable picture, and it’s all good, but where it moved, you get a slight halo where it’s a bit noisier, if you like, but it’s fairly marginal effect. But the rest of it, you know, you can run this whole thing at 60 frames a second or whatever, and it’s quite nice.

Then there’s another form of HDR that we’re doing: this only really works for still scenes at the moment. There’s kind of — a lot of this stuff is, I would say, it’s a bit of a first cut, some of this stuff; there’s a huge amount of new stuff, obviously, regardless of the fact that, you know, the whole thing is new, but there’s lots of new stuff we’ve developed for it as well. And so there’s this other form of HDR, where you actually get long and short frames, and it actually combines those directly together. And that, I would say, is more for still images at the moment. So there’s a slight work-in-progress on that one; it’s not so good on moving images, because the artefacts aren’t compensated so well on that. But yeah, that’s work in progress. So, you know, the next version, whenever that’s going to be, will be perfect.
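The long-and-short-frame approach can be pictured with a textbook merge for a still scene. This is a generic sketch, not the method used on Raspberry Pi 5: the 10-bit saturation level, the hard switch between exposures, and the function name are all assumptions, and a real pipeline would blend more gently and then tone-map the result.

```python
def merge_exposures(short_frame, long_frame, exposure_ratio, saturation=1023):
    """Combine a short and a long exposure of a still scene into one frame.

    Values are linear sensor values. The long exposure is used wherever it
    has not clipped; clipped pixels fall back to the short exposure scaled
    up by `exposure_ratio` (long exposure time divided by short exposure time).
    """
    merged = []
    for short_px, long_px in zip(short_frame, long_frame):
        if long_px < saturation:
            merged.append(float(long_px))
        else:
            merged.append(float(short_px) * exposure_ratio)
    return merged
```

With an exposure ratio of 8, `merge_exposures([10, 200], [80, 1023], 8)` returns `[80.0, 1600.0]`: the dark pixel keeps the cleaner long-exposure value, and the blown-out one is recovered from the short exposure. If anything moves between the two captures, the two frames disagree and artefacts appear, which matches David's caveat about moving images.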

Eben 27:57: But this is a — so this is — lots of this stuff is there in the system already, and is latent. And we’re kind of opening up — and I think this happens with every major revision of the platform. You know, we talk about — the major revisions of the platform are like steps, like treads on a staircase, and then it’s like, in between, the software work, you kind of pour sand on the staircase, and you get sort of a gradual improvement driven by software. And then this, you know, really what’s happened is this — it’s increased the ceiling on what you might be able to do in software.

David 28:27: Yes, and I think as time goes on, some of these things we’ll be able to do a bit better, particularly if… not always — you know, if you’re doing 4K video, 4Kp60 video, it’s kind of hard to do very much in software, just because there’s so many pixels flying around. But if you’re doing still image capture, actually there’s quite a lot of scope for adding some software processing and that kind of thing, to improve some of these areas that will then improve in hardware again later, on another revision. So there’s a whole kind of path of stuff happening there. You know, because the Arm cores on this thing are —

Eben 28:55: Pretty powerful.

David 28:56: They’re stonkingly good. They really are.

Eben 28:58: I just ran the numbers last night, actually, and I think we have something like, if you compare the GPU on the platform with the CPUs on the platform, you have about 50% — the total floating-point throughput of the Arms is about 76 gigaflops, and the total throughput of the GPU is about 50 gigaflops. So there really is — or mid-40s, even, maybe. So there really is a huge amount of performance available to you there on the Arms.

David 29:25: Yeah. Yeah, I mean, the thing I always come to is, the most exciting thing about this platform — all these things that we’ve got there, whether it’s temporal denoise, and HDR, and better this, better that, more output formats, you know, just more of everything, that’s better, on everything. This is all great. But actually, the most exciting thing is that it’s our platform, and we get to develop it going forwards. I mean, that’s actually the most — that’s the most exciting thing, because up till now, we’ve had a platform, it has never changed. Nothing has ever changed about it. No bug has ever been fixed. No material performance improvement, other than a bit of clock uplift, I suppose. But there’s been nothing, ever. Right?

Eben 30:04: And so this new ISP was developed at Raspberry Pi and then put into the core chip —

David 30:10: Yeah — it is ours, you know, we’re already making plans for the next version of this thing, you know, some of the cool features that we haven’t squeezed into this one, they’re gonna go into — whenever the next one is…

Eben 30:24: Imaging had become an outlier — in terms of the hardware, imaging had become an outlier, that it was the only thing that had not moved forwards once we upgraded to, in the last generation, to VideoCore VI for 3D, and to VideoCore V for the scanout for HVS. It had become the only surviving — well, maybe video encode as well — about the only surviving block in the platform that hadn’t seen some attention since the days of [BCM]2708, [BCM]2835.

David 30:53: Yeah, yeah. So this — it’s hard to exaggerate what a big deal this is: it’s not a step change, it’s a whole new building. It’s just everything is brand new. But now the step changes will go forward on every — every time we can find an occasion to do a new version, we’ll come to you and say: we want to do this.

Eben 31:13: Yeah. Give me a new chip!

David 31:14: Yeah. We want a new chip please!

Eben 31:15: Look, I have RTL, give me a new chip…!

David 31:16: We’ve got new fabulous stuff. So that’s really exciting.

Nick 31:20: That’s very exciting.

Eben 31:21: Excellent, well, I’m looking forward to having a play with it. Thank you, thank you very much.